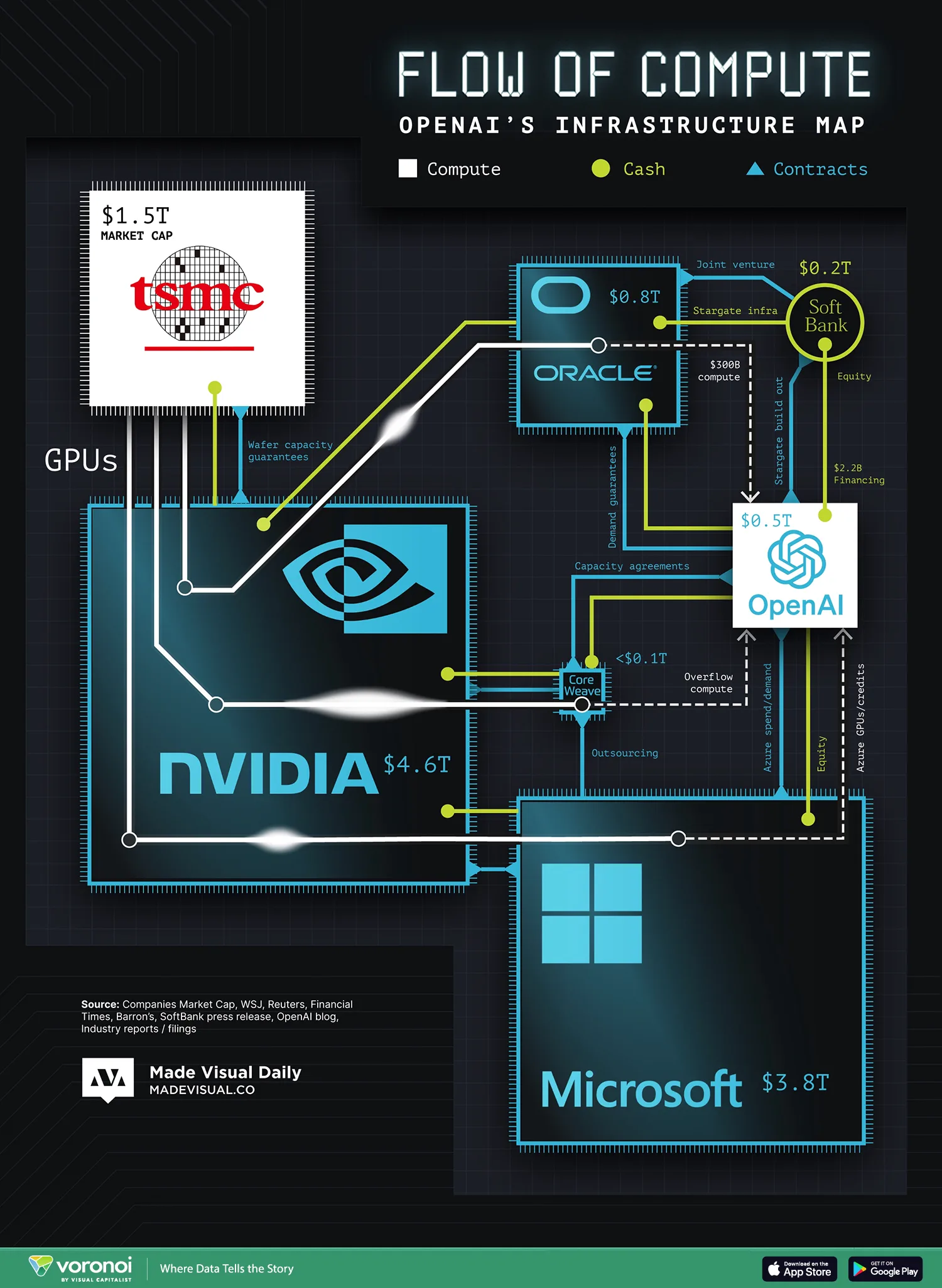

Mapped: The Compute, Cash, and Contracts that Power OpenAI

This was originally posted on our Voronoi app. Download the app for free on iOS or Android and discover incredible data-driven charts from a variety of trusted sources.

- OpenAI’s infrastructure relies on a complex web of GPU supply, corporate partnerships, and vast amounts of capital.

- A “closed loop” of financial deals between AI companies and chipmakers has triggered warnings of a potential bubble.

To train and deploy cutting-edge AI models like those behind ChatGPT, OpenAI relies on a sprawling infrastructure network involving multiple billion-dollar entities, intricate contracts, and vast capital commitments. A new visualization from Made Visual Daily maps this infrastructure pipeline using three flows (compute, cash, and contracts), highlighting the increasingly circular nature of AI development funding.

The map synthesizes data from public financial reports, media disclosures, and filings in an attempt to show who builds what, who pays whom, and where potential risk may be accumulating in the system.

The biggest nodes in the diagram are familiar names, listed here with their market capitalizations: Nvidia ($4.6 trillion), Microsoft ($3.8 trillion), TSMC ($1.5 trillion), and Oracle ($0.8 trillion). OpenAI itself, valued at around $500 billion in its most recent secondary sale, anchors the middle of the chart. Microsoft, in particular, plays a dual role: it provides compute (via Azure) and injects capital and GPU credits back into OpenAI.

The GPU Supply Chain: Scarcity, Dominance, and Dependency

The engine behind OpenAI—and much of today’s generative AI—is the Nvidia GPU.

But these chips don’t come out of thin air. The GPU supply chain is global and fragile:

- Design: Nvidia designs the chips in-house.

- Fabrication: TSMC (Taiwan Semiconductor Manufacturing Company) fabricates the chips at its advanced 5nm and 4nm nodes.

- Assembly: The chips are then packaged and tested by firms like Quanta and Foxconn.

- Deployment: Server makers such as Supermicro integrate them into AI-optimized racks and clusters.

- Delivery: These clusters are shipped to cloud providers like Microsoft Azure and CoreWeave.

Any disruption along this chain—whether geopolitical, economic, or logistical—can send shockwaves through the entire AI sector. That’s why the U.S. has placed tight export controls on AI chips, and why countries like China are scrambling to develop domestic alternatives.

Demand for Nvidia's H100 GPUs has grown so intense that cloud firms and startups alike are reserving capacity months or even years in advance. In rare cases, companies have even pledged GPUs as collateral to secure financing, reinforcing the chips' role as a new strategic commodity.

Closed-Loop Capital and the AI Bubble Risk

What makes the modern AI ecosystem remarkable isn’t just the number of players involved—it’s how deeply interwoven their financial and operational relationships have become.

Microsoft, for instance, has invested over $13 billion in OpenAI, while also serving as its primary cloud and compute partner through Azure. Much of OpenAI’s model training runs on clusters powered by Nvidia GPUs, procured via Microsoft’s cloud infrastructure.

At the same time, Microsoft is the primary customer of CoreWeave, a rapidly growing cloud provider that also buys large volumes of Nvidia hardware—often financed through credit arrangements with private investors and funds.

This creates an interdependent web of capital, compute, and contracts, where the same dollars and chips circulate between a handful of firms dominating AI’s supply chain. Analysts have noted that such tight coupling could magnify shocks if demand or funding conditions change abruptly.
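One way to make the "closed loop" idea concrete is to treat the relationships above as a directed graph and look for cycles. The sketch below is illustrative only: the edge list is a simplified reading of the deals described in this article, not data taken from the visualization, and the names `FLOWS`, `build_graph`, and `find_cycles` are hypothetical helpers introduced for this example.

```python
from collections import defaultdict

# Illustrative edges only: (source, target, flow type). Each is a simplified
# reading of a relationship described in the article, not chart data.
FLOWS = [
    ("Microsoft", "OpenAI", "cash"),         # investment of over $13 billion
    ("Microsoft", "OpenAI", "compute"),      # Azure capacity
    ("Microsoft", "CoreWeave", "contract"),  # Microsoft as CoreWeave's primary customer
    ("CoreWeave", "Nvidia", "cash"),         # large hardware purchases
    ("Nvidia", "Microsoft", "compute"),      # GPUs reaching Azure (server makers omitted)
]


def build_graph(flows):
    """Collapse typed flows into a plain adjacency map: source -> set of targets."""
    graph = defaultdict(set)
    for src, dst, _kind in flows:
        graph[src].add(dst)
    return graph


def find_cycles(graph):
    """Return every simple cycle once, starting at its alphabetically smallest node."""
    cycles = []

    def walk(start, node, path):
        for nxt in sorted(graph.get(node, ())):
            if nxt == start:
                # Closed the loop back to where this search began.
                cycles.append(path[:])
            elif nxt > start and nxt not in path:
                # Only visit nodes that sort after `start`, so each cycle
                # is reported exactly once rather than once per member.
                walk(start, nxt, path + [nxt])

    for start in sorted(graph):
        walk(start, start, [start])
    return cycles


if __name__ == "__main__":
    for cycle in find_cycles(build_graph(FLOWS)):
        print(" -> ".join(cycle + cycle[:1]))
```

With these assumed edges, the only loop runs through Microsoft, CoreWeave, and Nvidia, mixing cash, contracts, and compute along the way. Adding or removing a single edge changes the result, which is a reminder that any "circularity" finding depends heavily on which deals are included and how they are simplified.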

Learn More on the Voronoi App

To dig deeper into the relationship between OpenAI and its backers, explore our related post: OpenAI vs Big Tech.