
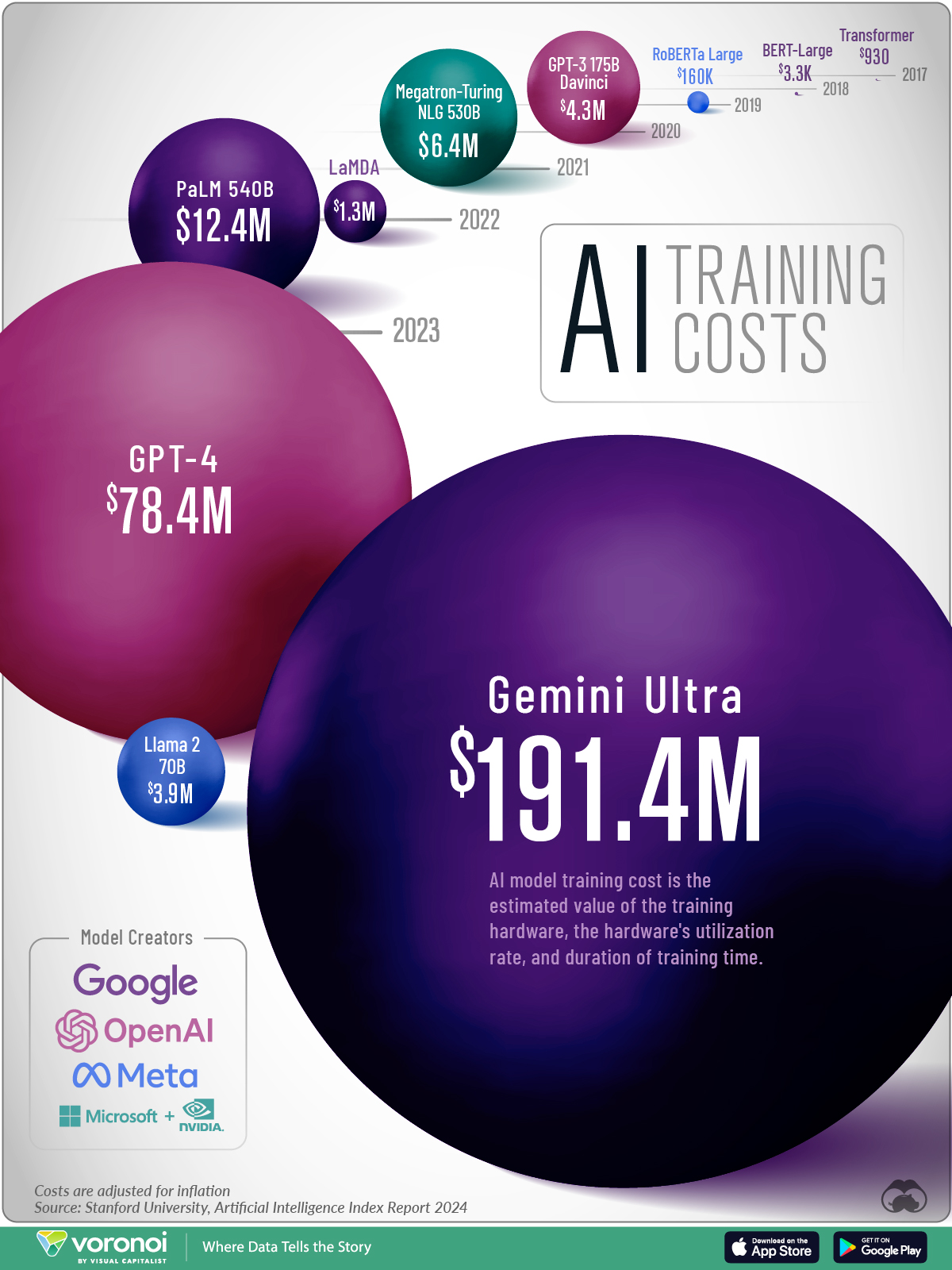

Visualizing the Training Costs of AI Models Over Time

This was originally posted on our Voronoi app. Download the app for free on iOS or Android and discover incredible data-driven charts from a variety of trusted sources.

Training advanced AI models like OpenAI’s ChatGPT and Google’s Gemini Ultra requires millions of dollars, with costs escalating rapidly.

As computational demands increase, so does the cost of the computing power needed to train these models. In response, AI companies are rethinking how they train generative AI systems, in many cases pursuing strategies to curb computational costs given current growth trajectories.

This graphic shows the surge in training costs for advanced AI models, based on analysis from Stanford University’s 2024 Artificial Intelligence Index Report.

How Training Cost is Determined

The AI Index collaborated with research firm Epoch AI to estimate AI model training costs, which were based on cloud compute rental prices. Key factors that were analyzed include the model’s training duration, the hardware’s utilization rate, and the value of the training hardware.
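As a rough illustration of how these factors interact, the sketch below derives a training run's duration from its compute budget and the hardware's utilization rate, then prices that duration at an hourly cloud rental rate. It is a minimal sketch under simplifying assumptions, not the AI Index or Epoch AI methodology: the function names, parameters, and example inputs (chip count, peak throughput, utilization, hourly price) are all hypothetical.

```python
SECONDS_PER_HOUR = 3600


def training_hours(training_flop: float, num_chips: int,
                   peak_flop_per_sec: float, utilization: float) -> float:
    """Wall-clock hours implied by a compute budget (hypothetical model).

    Each chip delivers only a fraction (the utilization rate) of its peak
    throughput, so lower utilization means a longer, costlier run.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    effective_flop_per_sec = num_chips * peak_flop_per_sec * utilization
    return training_flop / effective_flop_per_sec / SECONDS_PER_HOUR


def rental_cost(num_chips: int, hours: float, price_per_chip_hour: float) -> float:
    """Cost of renting num_chips accelerators for the given number of hours."""
    return num_chips * hours * price_per_chip_hour


# Hypothetical inputs, not figures from the AI Index report.
hours = training_hours(1.8e21, num_chips=10, peak_flop_per_sec=1e15, utilization=0.5)
cost = rental_cost(10, hours, price_per_chip_hour=2.0)
print(f"{hours:.0f} hours, ${cost:,.0f}")  # prints "100 hours, $2,000"
```

In this simplified model, halving the utilization rate doubles both the training duration and the rental bill, which is why utilization is one of the key inputs.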

While many have speculated that training AI models has become increasingly costly, there is a lack of comprehensive data supporting these claims. The AI Index is one of the rare sources for these estimates.

Ballooning Training Costs

Below, we show the training cost of major AI models, adjusted for inflation, since 2017:

| Year | Model Name | Model Creators/Contributors | Training Cost (USD, inflation-adjusted) |
|---|---|---|---|
| 2017 | Transformer | Google | $930 |
| 2018 | BERT-Large | Google | $3,288 |
| 2019 | RoBERTa Large | Meta | $160,018 |
| 2020 | GPT-3 175B (davinci) | OpenAI | $4,324,883 |
| 2021 | Megatron-Turing NLG 530B | Microsoft/NVIDIA | $6,405,653 |
| 2022 | LaMDA | Google | $1,319,586 |
| 2022 | PaLM (540B) | Google | $12,389,056 |
| 2023 | GPT-4 | OpenAI | $78,352,034 |
| 2023 | Llama 2 70B | Meta | $3,931,897 |
| 2023 | Gemini Ultra | Google | $191,400,000 |
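To put the table's figures in proportion, the multiples between models can be computed directly. This is a minimal Python sketch; the helper names are hypothetical, and the compound yearly multiple is only a crude illustration, since it compares two different models rather than tracking one model's cost over time.

```python
# Inflation-adjusted training costs (USD) copied from the table above.
costs = {
    "Transformer": 930,
    "PaLM (540B)": 12_389_056,
    "GPT-4": 78_352_034,
    "Gemini Ultra": 191_400_000,
}


def fold_increase(old: float, new: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


def annual_multiple(old: float, new: float, years: int) -> float:
    """Constant yearly multiple that turns `old` into `new` over `years` years."""
    return (new / old) ** (1 / years)


print(f"GPT-4 vs PaLM: {fold_increase(costs['PaLM (540B)'], costs['GPT-4']):.1f}x")
print(f"Gemini Ultra vs Transformer: "
      f"{fold_increase(costs['Transformer'], costs['Gemini Ultra']):,.0f}x")
# 2017 -> 2023 spans six years; different models, so this is only a rough trend.
print(f"Implied yearly multiple: "
      f"{annual_multiple(costs['Transformer'], costs['Gemini Ultra'], 6):.1f}x")
```

On these figures, GPT-4 cost roughly 6.3 times as much to train as PaLM (540B), and Gemini Ultra roughly 206,000 times as much as the original Transformer.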

In 2023, OpenAI’s GPT-4 cost an estimated $78.4 million to train, a steep rise from Google’s PaLM (540B) model, which cost $12.4 million just a year earlier.

For perspective, the original Transformer model, developed in 2017, cost just $930 to train. Its architecture laid the foundation for many of the large language models used today.

Google’s AI model, Gemini Ultra, cost even more, at a staggering $191.4 million. As of early 2024, the model outperforms GPT-4 on several metrics, most notably on the Massive Multitask Language Understanding (MMLU) benchmark. MMLU serves as a key yardstick for gauging the capabilities of large language models: it evaluates knowledge and problem-solving proficiency across 57 subject areas.

Training Future AI Models

Given these challenges, AI companies are finding new solutions for training language models to combat rising costs.

These approaches include building smaller models designed to perform specific tasks. Other companies are experimenting with generating their own synthetic data to feed into AI systems. However, a clear breakthrough has yet to emerge.

Today, AI models trained on synthetic data have been shown to produce nonsense in response to certain prompts, a failure mode referred to as “model collapse”.
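One common way to build intuition for model collapse is a toy statistical analogy rather than a full language model: repeatedly fit a distribution to samples drawn from the previous generation's fit, so each generation learns only from synthetic data. The sketch below assumes a one-dimensional Gaussian and a small sample size per generation; the function name and parameters are hypothetical, and it is not a description of how any production AI system is trained.

```python
import random
import statistics


def simulate_collapse(generations: int, samples_per_gen: int,
                      seed: int = 0) -> list[float]:
    """Toy model-collapse analogy (hypothetical helper).

    Generation 0 is the "real" data distribution N(0, 1); every later
    generation is fitted only to synthetic samples drawn from its
    predecessor's fit. Returns the fitted standard deviation per generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # Maximum-likelihood fit: sample mean and population standard deviation.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


history = simulate_collapse(generations=200, samples_per_gen=10)
print(f"std at generation 0: {history[0]:.3f}, after 200: {history[-1]:.2e}")
```

With only 10 samples per generation, each refit tends to underestimate the spread slightly, and those errors compound: the fitted distribution narrows toward a single point, loosely mirroring the loss of diversity described as model collapse.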

The post Visualizing the Training Costs of AI Models Over Time appeared first on Visual Capitalist.